Streamz is built to take instructions from a user-written driver file using the Streamz command "language". The user typically states fluid characterizations, specifies conversions among multiple characterizations, and causes the program to convert quantities contained in stream files containing hundreds (or millions) of streams. A rich set of about 30 commands (primary keywords) is understood by Streamz (but not very well by users). The alphabetical list is given below. Each is explained, with examples, on the page linked to the command.

| Primary Keywords | Brief Description |
|---|---|
| BIPS | Enter a table of Binary Interaction Parameters |
| CD | Change Directory |
| CHARACTERIZATION | Name and enter a fluid characterization |
| CLEAR | Clear named filters and streams |
| COMBINE | Combine input streams into named streams |
| COMPONENT | Enter a table of properties for current characterization |
| CONVERT | Define a conversion procedure from a named to "current" characterization |
| COPY | Copy streams from input to output stream files |
| DEFINE | Define named filters |
| DOMAIN | Name domains and their types |
| ECHO | Turn on echoing of input (driver) files |
| END | Declare the end of current primary keyword |
| EOF | Declare the end of file |
| EOS | Declare the Equation of State for next characterization |
| FILTER | Define named filters |
| GAMMAFILE | Open and close files for Gamma distribution results |
| INCLUDE | Include files |
| LUMP | Create lumped fraction from defined components |
| MIX | Prepare named streams from other streams or components |
| PROCESS | Process a stream through a set of connected separators |
| REDUCE | Convert from molar streams to volumetric streams |
| RESTORE | Make a previously defined characterization "current" |
| SEPARATOR | Define a separator |
| SPLITFILE | Open and close split files |
| STREAMFILE | Open and close stream files |
| TABS | Define "tab" positions for current file |
| TAG | Add variables & values to named streams |
| TABULATE | Sum-up and tabulate variables while converting |
| TITLE | Define a boxed title |
| TOTAL | Sum named streams |
| VARIABLE | Name variables and their types |
| WRITE | Output named streams to stream file(s) |

ALIAS Table: a list of the aliases of the Primary Keywords and their Sub-Keywords.

(see also: ALIAS Table for alternative keywords)

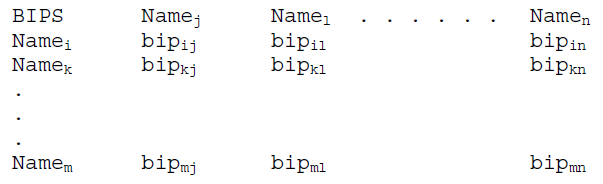

The BIPS keyword initiates the tabular input of the binary interaction parameters. The arguments (Name1 Name2 etc.) following this keyword are predefined component names (in any order). Following this command line, the program expects a formatted table containing the values of binary interaction parameters, where the values “line up” (see: line up procedure) with their heading, as shown below:

Here, for example, Namei is the name of the component i, as specified in the property table, and bipij is the parameter for the binary interaction of components i and j. A blank line or the word END completes a binary interaction parameter table. Any of the table entries may be left blank (defaulting to a previously specified value, initially zero). Specifying a value for bipij will also specify the same value for bipji. Any attempt to specify a non-zero bipii will be ignored.
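The symmetry and diagonal rules above can be illustrated with a small Python sketch. This is not Streamz code; the class name `BipTable` and its methods are hypothetical and only mirror the behaviour described in the text (values default to zero, setting bipij also sets bipji, non-zero bipii is ignored).

```python
class BipTable:
    """Illustrative symmetric store of binary interaction parameters."""

    def __init__(self, components):
        # Component names must be predefined, as the BIPS keyword requires.
        self.components = set(components)
        self._values = {}  # every pair is implicitly zero until specified

    def set(self, i, j, value):
        if i not in self.components or j not in self.components:
            raise KeyError("unknown component name")
        if i == j:
            # An attempt to specify bip_ii is ignored; the diagonal stays zero.
            return
        # An order-independent key makes bip_ij and bip_ji the same entry.
        self._values[frozenset((i, j))] = value

    def get(self, i, j):
        if i == j:
            return 0.0
        # A blank (never specified) entry keeps its previous value, initially zero.
        return self._values.get(frozenset((i, j)), 0.0)


table = BipTable(["N2", "CO2", "C1"])
table.set("N2", "C1", 0.025)   # also defines the C1-N2 value
table.set("CO2", "CO2", 0.5)   # ignored: diagonal entry
```

Leaving an entry blank in a later table corresponds to simply not calling `set` for that pair, so the earlier value is retained.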

For associating components to a bipij value, the component name for i is taken as the first entry in the row, and the component name for j is found using the table line up procedure described here. This strategy allows the use of blanks within the table, wherever default entries are desired, without causing the rest of the table to become misaligned.

The entry of BIPS could be spread out into multiple tables, for example, if a single table was too wide for the user’s preference (or the editor’s).

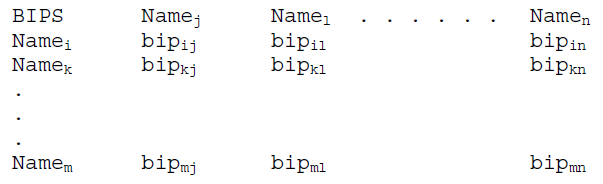

Example 1: Single BIPS table.

    BIPS      CIN2      CO2C2     C3-6      C7-9F1-2  F3-8      F9
    CIN2      0.0000    5.74E-02  4.13E-04  2.72E-04  2.72E-04  2.72E-04
    CO2C2     5.74E-02  0.0000    5.75E-02  4.79E-02  4.79E-02  4.79E-02
    C3-6      4.13E-04  5.75E-02  0.0000    0.0000    0.0000    0.0000
    C7-9F1-2  2.72E-04  4.79E-02  0.0000    0.0000    0.0000    0.0000
    F3-8      2.72E-04  4.79E-02  0.0000    0.0000    0.0000    0.0000
    F9        2.72E-04  4.79E-02  0.0000    0.0000    0.0000    0.0000
    END

Example 2: Complete BIPS definition spread over multiple tables, with some entries in the last table left empty, and defaulting to 0.0.

BIPS N2 CO2 C1 C2 C3 IC4 C4 IC5 N2 0.0000 0.0000 0.0250 0.0100 0.0900 0.0950 0.0950 0.1000 CO2 0.0000 0.0000 0.1050 0.1300 0.1250 0.1200 0.1150 0.1150 C1 0.0250 0.1050 0.0000 0.8260 -0.1120 0.0265 0.0000 0.0000 C2 0.1000 0.1300 0.0826 0.0000 0.0130 0.0170 0.0000 0.0000 C3 0.0900 0.1250 -0.1120 0.0130 0.0000 0.0200 0.0000 0.0000 IC4 0.0950 0.1200 0.0265 0.0170 0.0200 0.0000 0.0149 0.0000 C4 0.0950 0.1150 0.0000 0.0000 0.0000 0.0149 0.0000 0.0129 IC5 0.1000 0.1150 0.0000 0.0000 0.0000 0.0000 0.0129 0.0000 BIPS N2 CO2 C1 C2 C3 IC4 C4 IC5 C6 0.1100 0.1150 0.0000 0.0120 F1 0.1100 0.1150 0.0014 0.0000 F2 0.1100 0.1150 0.0019 0.0000 F3 0.1100 0.1150 0.0027 0.0000 BIPS C5 C6 F1 F2 F3 N2 0.1100 0.1100 0.1100 0.1100 0.1100 CO2 0.1150 0.1150 0.1150 0.1150 0.1150 C1 0.0000 0.0000 0.0014 0.0019 0.0027 C2 C3 IC4 C4 IC5 0.0120 0.0000 0.0000 0.0000 C5 0.0000 0.0000 C6 0.0000 0.0037 0.0000 0.0000 F1 0.0037 0.0000 -0.1280 -0.2000 F2 0.0000 0.1280 0.0000 0.0000 F3 0.0000 0.2000 0.0000 0.0000

The EOS property table consists of a row of headings, a possible row of units, and rows of component values. A component value is associated with a heading according to its line up.

Columns are defined by headings in the first row of a table. Subsequent lines contain table entries or values that are associated with a column according to the following logic:

b) If a heading is not intersected, move left on the heading row until a heading is intersected.

For the BIPS table and associating components to a kij value, the component name for i is taken as the first entry in the row, and the component name j is found using the table line up procedure described above.
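A minimal Python sketch of the line up idea follows. It is an illustration only, not the program's implementation. It assumes that an entry is anchored at its first character, and that "moving left" means taking the nearest heading that starts at or to the left of that anchor; the function names `heading_spans` and `line_up` are hypothetical.

```python
import re


def heading_spans(heading_row):
    """Return (name, start_column) for each whitespace-separated heading."""
    return [(m.group(), m.start()) for m in re.finditer(r"\S+", heading_row)]


def line_up(heading_row, data_row):
    """Map each entry in data_row to the heading it lines up with."""
    spans = heading_spans(heading_row)
    result = {}
    for entry in re.finditer(r"\S+", data_row):
        col = entry.start()  # ASSUMPTION: the first character is the anchor
        owner = None
        for name, start in spans:
            if start <= col:
                owner = name  # nearest heading at or to the left of the anchor
        if owner is not None:
            result[owner] = entry.group()
    return result


# Headings start at columns 0, 7, 14 and 21.
heading = f"{'COMP':<7}{'MW':<7}{'TC':<7}{'PC':<7}"
# The TC entry is left blank; the PC entry still lines up with PC.
row = f"{'C1':<7}{'16.04':<7}{'':<7}{'45.4':<7}"
# An entry starting at column 9 is not under a heading; moving left finds MW.
shifted = "C2" + " " * 7 + "30.07"

print(line_up(heading, row))
print(line_up(heading, shifted))
```

Because a blank entry produces no token at all, the remaining entries on the row keep their column association, which is the property the text relies on.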

Example of Table Line Up

The CD keyword allows the user to change the current directory for inclusion of files or to open files from certain directories, without the need to specify the path along with the name of file. This keyword provides the functionality of the change directory command of many OSes.

path_spec may include symbols typically used in “change directory” commands of supported OSes (Win9x/NT, DOS, Unix, Mac OS), such as:

“.” for current directory,

“..” for parent directory,

“/” (or “\” or “:” depending on the OS) for directory name separators.

If the path_spec includes embedded spaces, it should be enclosed within quotes. Care should be taken while using these special symbols, as they may trigger errors if used on the wrong OS.

Example 1: Relative path specification on DOS/Windows

CD `..\..\InputFiles\BaseCase`

In this example the current directory is changed to BaseCase under InputFiles, which is one level above the previous current directory.

Example 2: Absolute path specification on DOS/Windows

CD `D:\Data\BaseCase`

In this example the current directory is changed to BaseCase under Data, which is under the root directory on drive D.

Example 3: Absolute path specification on Unix

CD `~/Simulation/Sensitivity/EOS6`

In this example the current directory is changed to EOS6 under Simulation/Sensitivity, which is under the user’s login directory.

(see also: ALIAS Table for alternative keywords)

The CHARACTERIZATION keyword specifies a name for the characterization which is being described. The argument char_name makes it a named characterization. The actual description of the characterization is done using the COMPONENT and the BIPS keywords. The CHARACTERIZATION keyword makes the named characterization "current" and associates the property table (COMP keyword) and the binary interaction parameters (BIPS keyword) with it.

This named characterization is also used in a CONVERT keyword which defines the conversion between two characterizations. The RESTORE keyword can be used later in the same file to change back to a previously defined characterization if another characterization has been defined subsequently (see Example 2).

If the argument char_name includes embedded spaces, it should be enclosed within quotes (see Example 1). Otherwise, no quotes are required (see Example 2).

Example 1: Use of keyword; char_name includes embedded spaces

CHAR `EOS15 Gas Cap`

In this example, the EOS properties and BIPS that are entered after this command are stored with the characterization name “EOS15 Gas Cap”.

Example 2: Use of alternate keywords (see ALIAS Table); No quotes needed for char_name since it does not include embedded spaces

    CHARACTERIZATION EOS3Comp
    COMP  MW
    C1    44
    C4    78
    C10   200

    PROPERTIES AnotherChar
    COMP  MW
    C1    44
    C2    56
    . . . . . .
    F3    256
    F4    316
    F5    478

    RESTORE EOS3Comp

In the above example, a characterization named EOS3Comp is defined and then AnotherChar is defined making it the current characterization. To make EOS3Comp "current" again, a RESTORE command has to be used.

This keyword is used to CLEAR all the FILTERS and named STREAMS from memory. When used with optional sub-keywords it can also CLEAR only one or the other. When filters are defined, each new filter is stored in memory, even if the new one has the same name as a previous one, because the definition of the new filter may itself use the old one. In this way, each new use of the FILTER command stores the filter and its logic in memory, where it is available for use in any command such as COPY, COMBINE etc.

Similarly, each use of the COMBINE (or TOTAL) command stores the named STREAMS and all its associated variables and amounts in memory. To generate proper output for some applications, like a reservoir simulator, the number of FILTER and COMBINE commands may run into hundreds. The processing of such streams may take an unacceptably long time. To solve such problems, frequently used FILTER and COMBINE commands can be used repeatedly. To make such processing efficient, filters and/or streams that are no longer required may be erased from memory using the CLEAR command.

Example 1: Various uses of CLEAR command

    . . .
    CLEAR Filters
    . . .
    CLEAR Streams
    . . .
    CLEAR

This example illustrates the various possible uses of the CLEAR keyword. It first clears all the filters, then clears all the streams in memory and then clears both.

(see also: ALIAS Table for alternative keywords)

COMBINE stream_nickname

[IF filter_name]

[WEIGHT [BY|OVER] var_spec [AND var_spec ]…]

[OVER var_spec [AND var_spec ]…]

[NORMALIZE]

[SCALE value]

This keyword aggregates input streams satisfying filter criteria (named filters defined using the FILTER command) into a new named stream with the name stream_nickname. The first argument to this command is the name of the resulting combined stream. Multiple arguments can follow, each either a named filter or another manipulative option. A COMBINE command does not initiate writing to a file; this is accomplished with a corresponding WRITE command.

| Sub-Keywords | ALIAS |
|---|---|
| IF | |
| NORMALIZE | NORM |
| SCALING | SCALE, SCAL |
| AND | |
| WEIGHTING | WEIGHT, WEIGH |
| OVERING | OVER |
| WEIGHT OVER | |

IF: This keyword must be followed by the name of an earlier defined filter and results in the input stream being processed through it before being converted and combined. Only a single IF with its associated filter name is expected. If multiple occurrences are encountered only the last one will be used.

NORMALIZE: This keyword results in conversion of amounts of the output stream into fractions. If the input stream is in molar quantities, the output will be in molar fractions.

SCALING multiplies the output streams by a factor. This can be used for conversion from one set of units (e.g. moles/day) to another (moles/hour) if the output streams are required in a particular unit by the program using it. If SCALE 100 is used with the NORMALIZE command, the output streams are written in percentage instead of fractions.

AND: This keyword is used to specify multiple var_spec to the WEIGHT and OVER options. var_spec is the name of a numerical variable or domain, followed by its desired units, if applicable.

The WEIGHT option to the COMBINE command applies a product of the weigh variables to each converted stream after normalization (if requested). Each stream is then summed and multiplied by the value of SCALE (if used) to give the final stream. The argument to this command is one or more variables or domains (including units if applicable). Multiple weigh variables must be separated by the AND keyword. An optional BY keyword is allowed if the name of the weigh variable happens to be ‘over’.

The OVER option to the COMBINE command divides the converted and normalized (if requested) stream by a summation of the individual stream which have been multiplied individually by the product of the over variables. The argument to this command is one or more numeric variables or domains (including units if applicable). Multiple over variables must be separated by the AND keyword.

It is also possible to use the OVER keyword in conjunction with the WEIGHT keyword to mean a combined WEIGHT OVER options using the same variables for both options. This is a typical usage of these options when portions of the original stream are affected due to use of the FILTER command. The use of the WEIGHT OVER options in the COMBINE command needs to be explained in detail. The converted and normalized (if requested) streams are combined by the formula:

Final_Stream = Scale*Sum(Product(Weight-Vars)*Stream)/Sum(Product(Over-Vars))

Division by zero will never occur. If the denominator turns out to be zero, the final stream will be set to zero.

A "Weight-Var" is the value of a variable or the size of a domain (upper variable minus lower variable) requested by the WEIGHT option. The values or sizes are calculated in their requested units (if applicable) before any variables might be altered by filtering. This allows rates to be converted correctly to cumulative amounts, for example. Any undefined Weight-Var is assumed to be zero.

An "Over-Var" is the value of a variable or the size of a domain (upper variable minus lower variable) requested by the OVER option. Variable values are calculated in their requested units (if applicable) before any variables might be altered by filtering, but domain sizes are calculated in their requested units (if applicable) after the COMBINE command's filter has altered any necessary variables. This allows cumulative amounts to be converted correctly to rates, for example. Any undefined Over-Var is assumed to be zero.
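The COMBINE formula can be sketched in Python as follows. This is a simplified illustration under stated assumptions, not the program's algorithm: each stream is a plain list of component amounts that has already been converted, the per-stream products of Weight-Vars and Over-Vars are passed in as ready-made numbers, and the function name `combine` and its parameters are hypothetical. When no OVER option is given, the denominator is taken as 1, consistent with the reduced formulas in the examples.

```python
def combine(streams, weights=None, overs=None, scale=1.0, normalize=False):
    """Scale * Sum(weight_k * stream_k) / Sum(over_k), with a zero-denominator guard.

    streams : list of equally long lists of component amounts
    weights : product of Weight-Vars for each stream (defaults to 1 for each)
    overs   : product of Over-Vars for each stream (None means OVER not used)
    """
    n_comp = len(streams[0]) if streams else 0
    if weights is None:
        weights = [1.0] * len(streams)

    total = [0.0] * n_comp
    for stream, weight in zip(streams, weights):
        if normalize:
            # Normalization is applied to each stream before weighting.
            s = sum(stream)
            stream = [x / s for x in stream] if s else [0.0] * n_comp
        for c, amount in enumerate(stream):
            total[c] += weight * amount

    denominator = 1.0 if overs is None else sum(overs)
    if denominator == 0.0:
        # Division by zero never occurs: the final stream is set to zero.
        return [0.0] * n_comp
    return [scale * t / denominator for t in total]


two_streams = [[1.0, 2.0], [3.0, 4.0]]
plain_sum = combine(two_streams)
weighted = combine(two_streams, weights=[2.0, 0.5])
average = combine(two_streams, weights=[1.0, 1.0], overs=[1.0, 1.0])
percent = combine([[1.0, 3.0]], normalize=True, scale=100.0)
```

The sketch deliberately ignores the timing detail described above (Weight-Vars evaluated before filtering, Over-Var domain sizes after filtering); the caller is assumed to supply values that already reflect it.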

Example 1: Simple use of COMBINE with single FILTER

COMBINE YEAR1: IF YEAR1

In this example, a named stream called “YEAR1” is created in memory. All streams satisfying the previously defined filter, also called “YEAR1”, are converted and added to this named stream. The formula reduces to:

Final_Stream = Sum(Stream)

Example 2: Advanced use of COMBINE with FILTER, DEFINE, and WEIGHT OVER.

    DOMAIN TIME T1 T2
    DEFINE T1 0.0
    DEFINE T2 1.0
    FILTER YEAR: TIME GE ?T1? YEARS AND TIME LE ?T2? YEARS
    COMBINE YEARS_?T1?-?T2? IF YEAR, WEIGHTING OVER TIME (DAYS)

This example assumes that "TIME" variables 'T1' and 'T2' are associated with each stream. It declares a DOMAIN called “TIME” made up of 'T1' and 'T2'. It then associates the wildcards 'T1' and 'T2' with the strings 0.0 and 1.0 respectively. A filter called “YEAR” is defined with the interval 0.0-1.0 years as its criterion. A named stream called “YEARS_0.0-1.0” is created in memory. All streams satisfying the previously defined filter called “YEAR” are converted and added to this named stream. Before adding, the domain "TIME" for the “current” input stream (i.e. T2 - T1 for this stream) is calculated and multiplies each stream quantity. After adding, the requested domain (i.e. the domain of the resulting stream) is calculated and divides the summed stream. This gives us back rates after starting with rates. The formula applied is:

Final_Stream = Sum((TIME domain for each stream before filtering)*Stream) / Sum(TIME domain after filtering)

Example 3: Weighted sum of streams

COMBINE SUMMED WEIGHTING BY FACTOR

This example assumes that a real variable “FACTOR” is associated with each stream. The streams themselves are in different molar units known to the user and the value of “FACTOR” reflects the unit conversion to a consistent set. The COMBINE command creates a total stream named “SUMMED”, converting the units to a consistent set. It is basically doing a weighted sum. The formula reduces to:

Final_Stream = Sum(Factor*Stream)

Example 4: Simple arithmetic average of streams

VARIABLE ONE INT SET ONE = 1 COMBINE AVE WEIGHTING OVER ONE

This example declares an Integer Variable named “ONE” and SETs its value to 1 (any constant would do for this purpose). The COMBINE command creates an averaged stream named “AVE”. The Weight-Vars and Over-Vars cancel out, resulting in a simple arithmetic average. The formula reduces to:

Final_Stream = Sum(1*Stream)/Sum(1)

(see also: ALIAS Table for alternative keyword)

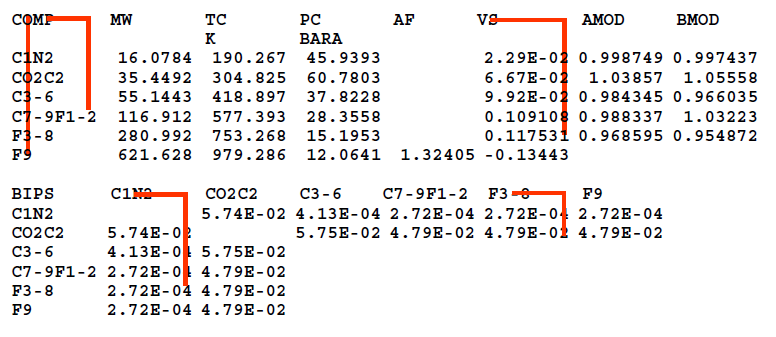

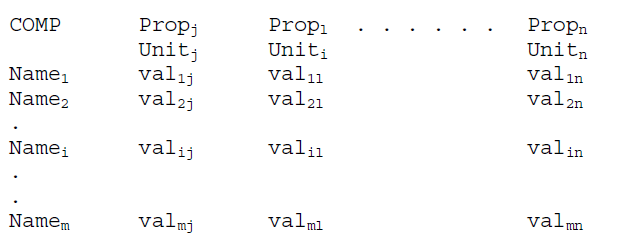

The COMPONENT primary keyword triggers tabular input of the full EOS property table that makes up the current characterization. The arguments (prop1 prop2 etc.) following this keyword are predefined critical properties for this characterization (in any order). This tabular input scheme is very flexible, allowing any of the component properties to be input in any order. Each property is identified by the heading keyword. For example, MW indicates molecular weight. The only constraint of the tabular input scheme is that any entries in the table that belong to a particular heading (i.e. property) should line up with the heading. This allows unknown entries to be left blank without misaligning the rest of the table.

The name of each component is listed under the COMPONENT heading. The value of any other property corresponding to that component is entered in the same row, lined up under the heading corresponding to the desired property. This input scheme is designed to enable cut-and-paste from any other data file or spreadsheet with minimal editing.

A possible row of units is allowed in the EOS property table immediately under the headings. If present, the units in that row are associated with the headings using the same line up procedure.

Here, for example, Namei is the name of component i, as specified in the property table, and valij is the value (entry) for the jth property of component i (identified by the heading Propj). A blank line or the word END completes a property table. Any of the table entries may be left blank (defaulting to a previously specified value, initially zero).

The entry of property tables could be spread out into multiple tables, for example if a single table was too wide for the user’s preference (or the editor’s).

| Sub-Keywords | ALIAS | Brief Description |
|---|---|---|
| MW | | Average Molecular Weight |
| SG | | Specific Gravity |
| TB | | Boiling Temperature |
| LMW | | Lower Molecular Weight |
| LSG | | Lower Specific Gravity |
| LTB | | Lower Boiling Temperature |
| UMW | | Upper Molecular Weight |
| USG | | Upper Specific Gravity |
| UTB | | Upper Boiling Temperature |
| TC | TCR | Critical Temperature |
| PC | PCR | Critical Pressure |
| ZC | ZCR | Critical Compressibility Factor |
| VC | VCR | Critical Volume |
| AF | | Acentric Factor |
| VT | VS | Volume Translation |
| AMOD | | A-parameter Modifier |
| BMOD | | B-parameter Modifier |
| VISZ | VZ, ZCV | Viscosity Z-factor |
| PCHOR | PARA | Parachor Property |
| FULL | | Full Name of Component |
| END | | End of the Table |
| ? or _ or ~ | | A comment to ignore a column |

MW: This is the average molecular weight property of a component. No units are associated with this property. The molecular weight of all the components as heavy as, or heavier than, the component specified to participate in Gamma distribution modeling must be entered. Also, the input and output units (see CONVERT keyword) of MASS and MOLES are considered compatible and internally convertible only if the MW property of the components is entered.

SG: This is the specific gravity of a component. No units are associated with this property.

TB: This is the boiling temperature of the component. The units of this property may be in any one of the accepted temperature Units.

LMW: This is the lower molecular weight of the component. When discrete cuts of components (or fractions) are used in a characterization, this property identifies the lower bound of the cut-off molecular weight for the fraction. No units are associated with this property. This is particularly useful for specifying the bounds for discretization of a Gamma distribution model into output components. Specifying only the average molecular weights (MW) in such cases results in the bounds being taken as averages of them, which gives rise to material balance errors.

LSG: This is the lower specific gravity of the component. When discrete cuts of components (or fractions) are used in a characterization, this property identifies the lower bound of the cut-off specific gravity for the fraction. No units are associated with this property.

LTB: This is the lower boiling temperature of the component. When discrete cuts of components (or fractions) are used in a characterization, this property identifies the lower bound of the cut-off boiling temperature for the fraction. The units of this property may be in any one of the accepted temperature Units.

UMW: This is the upper molecular weight of the component. When discrete cuts of components (or fractions) are used in a characterization, this property identifies the upper bound of the cut-off molecular weight for the fraction. No units are associated with this property. This is particularly useful for specifying the bounds for discretization of a Gamma distribution model into output components. Specifying only the average molecular weights (MW) in such cases results in the bounds being taken as averages of them, which gives rise to material balance errors.

USG: This is the upper specific gravity of the component. When discrete cuts of components (or fractions) are used in a characterization, this property identifies the upper bound of the cut-off specific gravity for the fraction. No units are associated with this property.

UTB: This is the upper boiling temperature of the component. When discrete cuts of components (or fractions) are used in a characterization, this property identifies the upper bound of the cut-off boiling temperature for the fraction. The units of this property may be in any one of the accepted temperature Units.

TC: This is the critical temperature of the component. The units of this property may be in any one of the accepted temperature Units.

PC: This is the critical pressure of the component. The units of this property may be in any one of the accepted pressure Units.

ZC: This is the critical compressibility factor (Z-factor) of the component. No units are associated with this property.

VC: This is the critical volume of the component. Units of molar volume are formed from the accepted units of volume and moles, which are listed under the REDUCE keyword. An example would be M3/KGMOL.

AF: This is the acentric factor of the component. It gives a measure of the steepness of the vapor pressure curve of pure components. No units are associated with this property.

VT: This is the volume translation property of the component. Specifically, this is the dimensionless volume correction (si = ci/bi) used to improve the volume (density) prediction of 2-parameter Cubic EOSes, without affecting their VLE predictions, since it does not affect equilibrium calculations for pure components and mixtures. No units are associated with this property.

AMOD: This is the A-parameter modifier of the component. It is a component dependent correction term for the EOS parameter A, to improve VLE predictions. No units are associated with this property.

BMOD: This is the B-parameter modifier of the component. It is a component dependent correction term for the EOS parameter B, to improve VLE predictions. No units are associated with this property.

VISZ: This is the Viscosity Z-factor of the component. No units are associated with this property.

PCHOR: This is the parachor property of the component. It is a temperature independent parameter which is determined experimentally and is proportional to the molecular weight of the component. It is used to calculate oil/gas interfacial tensions of complex hydrocarbon mixtures. No units are associated with this property.

FULL: This is the full name property of the component. No units are associated with this property. This property allows names including embedded spaces to be associated with a component (e.g. 'Carbon Dioxide'). This also allows remarks to be associated with components. Currently this is used to implement proprietary names for an in-house process simulator.

END: This sub-keyword signifies the end of the table of EOS properties.

? or _ or ~: Use of any one of these 3 special characters (_,?,~) directly in front of a table heading signifies a column comment. It results in the particular column (the heading and the respective 'lined up' data) being ignored by the program. This is useful if a user wants to temporarily not use some property of the characterization but retain it for reference only, or if he/she wants to have 2 sets of a property and use one while disabling the other.

The temperature and pressure units recognized in the EOS property table (also throughout the program) are:

| Units | ALIAS | Description |
|---|---|---|
| R | RANKINE, RAN | Rankine |
| K | KELVIN, KEL | Kelvin |
| F | FAHRENHEIT, FAH | Degree Fahrenheit |
| C | CELCIUS, CEL | Degree Celsius |
| ATMA | ATM | Atmospheres (absolute) |
| ATMG | | Atmospheres (gauge) |
| PSIA | PSI | Pounds per square inch (absolute) |
| PSIG | | Pounds per square inch (gauge) |
| BARA | BAR | Bar (absolute) |
| BARG | | Bar (gauge) |
| KPAA | KPA | Kilo Pascal (absolute) |
| KPAG | | Kilo Pascal (gauge) |
| MPAA | MPA | Mega Pascal (absolute) |
| MPAG | | Mega Pascal (gauge) |
| TORRA | TORR | Torr (absolute) |
| TORRG | | Torr (gauge) |

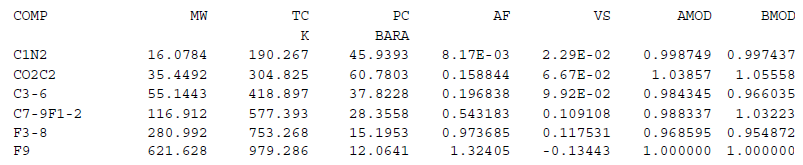

Example 1: Single property table, with units row.

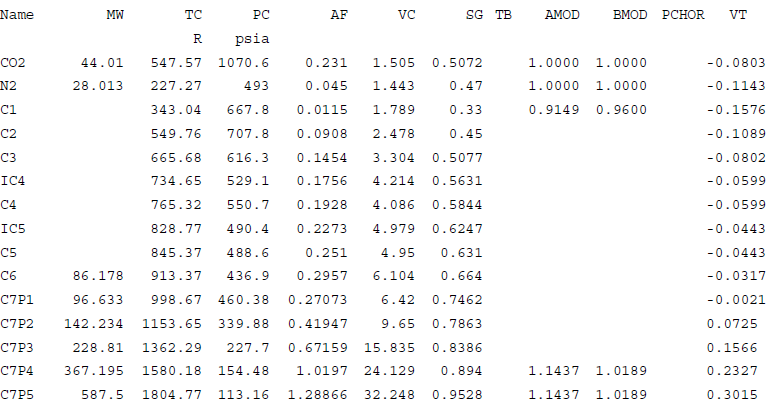

Example 2: Complete EOS property table with some entries left empty.

(see also: ALIAS Table for alternative keywords)

[WARNING [ON|OFF]]

[GAMMA inp_comp out_comp [FILE gamfil_nickname]

[IGNORE inp_comp]

[WEIGH inp_comp | AVERAGE|TOT [ w ] ]

[SHAPE [ parm_ini | parm_max | parm_min ]]

[BOUND [ parm_ini | parm_max | parm_min ]]

[AVERAGE [ parm_ini | parm_max | parm_min ]]

[ORIGIN | ZERO [ parm_ini | parm_max | parm_min ]] ]

[SET var_name var_value [ var_name var_value ]...]

[SPLITS splfil_nickname ]

[SPLIT | DELUMP in_comp [ out_comp spl_fctr [ out_comp spl_fctr ]...]]

This primary keyword defines completely the procedure to be used for conversions between a specified and the “current” characterizations, the units of input and output streams, and the quantity to be conserved in the conversion (if applicable).

Currently two procedures are available: 1) Gamma, and 2) Split.

The Gamma method is specified using the GAMMA sub-keyword. This method uses the Gamma distribution procedure to first fit the input stream to the Gamma model and then to calculate the amounts in the output stream corresponding to this calculated model.

The second method is to supply a set of split factors using the SPLIT sub-keyword. A combination of the two methods is also possible. This combination is the most typical procedure, as the heptanes plus (C7+) components are best converted using the Gamma distribution and the hexanes minus (C6-) components are converted using split factors.

The first argument to the CONVERT keyword is the name of a defined characterization. The CONVERT command requires its first line to be of the form:

CONVERT input_char FROM in_units TO out_units CONSERVE cons_units

The above options must appear on the same line as the CONVERT keyword, and no other sub-keywords may appear on that line.

| Sub-Keywords | ALIAS | Sub-Commands or Units Under Sub-Keyword | ALIAS |
|---|---|---|---|
| FROM | | MASS | |
| | | MOLES | |
| | | VOLUME | |
| | | AMOUNT | |
| TO | | MASS | |
| | | MOLES | |
| | | VOLUME | |
| | | AMOUNT | |
| CONSERVE | CONSERV, CON | | |
| WARNING | WARN | | |
| GAMMA | | FILE | |
| | | IGNORE | IGNOR |
| | | WEIGH | |
| | | SHAPE | |
| | | BOUNDARY | BOUND |
| | | AVERAGE | AVE, TOT |
| | | ORIGIN | ORIG, ZERO |
| SPLIT | DELUMP, LUMP | | |
| SET | | | |
| SPLITS | | | |

The FROM option defines the input units expected by the conversion procedure. Conversion will be possible only for input streams specified in these (or compatible) units. Four units are currently understood, namely MASS, MOLES, VOLUME, and AMOUNT. The FROM units should match those in the corresponding input stream files (MASS and MOLES are compatible if the component molecular weights have been defined). The actual streams may be in more specific, dimensional units, which may also denote rates, concentrations, fluxes, etc. For example, kgmol, lbmol/day, gmole/cc, or lbmol/ft2/sec would all fall under the category of MOLES. The true, dimensional units are not relevant to the program, but should be kept track of by the user, so as not to confuse lbmol/day with kgmol/hr, for example. A CONSERVE option to the CONVERT command will be discussed later in this section. If the FROM units are not specified, they will default to the TO units, the CONSERVE units, or MOLES, in that order of preference.

The TO option specifies the units of the output streams. If the TO unit has not been specified, it will default to the FROM units. Similar to the FROM option, four units are currently understood for the TO option too, namely MASS, MOLES, VOLUME, and AMOUNT.

The CONSERVING or CONSERVE option allows the user to specify the quantities to conserve during the conversion. Use of the CONSERVE option has two effects. First, the SPLIT factors will be checked for possible material balance errors; if they will not conserve the requested quantities, warnings will be issued. Second, it determines the way GAMMA distribution modeling is performed. Gamma modeling can CONSERVE either MOLES or MASS, but generally not both. Moles are conserved by default, but the option to conserve MASS can be used instead. Any combination of FROM, TO, and conserved units may be specified, but the CONSERVE option has an effect only if the conserved units are compatible with both the FROM and TO units. Otherwise, it is ignored.
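The defaulting and compatibility rules for the FROM, TO, and CONSERVE units can be summarized in a short Python sketch. The function names are hypothetical, and the compatibility test is an assumption limited to what the text states: identical units are compatible, and MASS and MOLES are compatible only when molecular weights are defined.

```python
def resolve_units(from_units=None, to_units=None, conserve_units=None):
    """Apply the documented defaults for the FROM and TO units."""
    if from_units is None:
        # FROM defaults to TO, then CONSERVE, then MOLES, in that order.
        from_units = to_units or conserve_units or "MOLES"
    if to_units is None:
        # TO defaults to the FROM units.
        to_units = from_units
    return from_units, to_units


def compatible(a, b, mw_defined):
    """ASSUMPTION: only identical units, or MASS/MOLES with MW defined, are compatible."""
    if a == b:
        return True
    return mw_defined and {a, b} == {"MASS", "MOLES"}


def conserve_is_effective(from_units, to_units, conserve_units, mw_defined):
    """CONSERVE has an effect only if compatible with both FROM and TO units."""
    return (compatible(conserve_units, from_units, mw_defined)
            and compatible(conserve_units, to_units, mw_defined))


print(resolve_units())                       # nothing specified
print(resolve_units(to_units="MASS"))        # FROM follows TO
print(resolve_units(conserve_units="MASS"))  # FROM follows CONSERVE, TO follows FROM
```

In this reading, a request such as FROM VOLUME TO MOLES CONSERVE MOLES would leave the CONSERVE option without effect, because MOLES is not compatible with VOLUME.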

The WARNING option allows the user to turn off warnings when the program checks for unspecified split factors and split factors which do not conserve mass or moles. This is particularly useful when the user intentionally does not enter split factors. The argument to this option is either ON or OFF, with ON being the default.

GAMMA: This sub-keyword specifies the method of conversion between the components of the input characterization and the components of the output characterization. It expects two mandatory arguments in the form of the names of one component each of the input and output characterizations (in_comp and out_comp). These are the lightest components in each which are requested to participate in the Gamma modeling. The molecular weights and the amounts of all input components as heavy as, or heavier than that specified, would be used to calculate a gamma distribution model. The model and the molecular weights of all output components as heavy as, or heavier than that specified, would then be used to calculate the amounts of the output stream. The sub-commands available within the context of the GAMMA sub-keyword are described below.

FILE: The FILE sub-command to GAMMA specifies the nickname of the file where Gamma modeling results will be written. This nickname must have been previously defined using the GAMMAFILE primary keyword.

IGNORE: The IGNORE sub-command to GAMMA instructs the program not to include suspect data for Gamma modeling. The argument in_comp specifies the first component, including it and subsequent ones, for which the amount data will be ignored. By default the molecular weight of these components will also be weighted to 0.

WEIGHT: The optional WEIGHTING sub-command to the GAMMA sub-keyword of the CONVERT primary keyword controls the weighting of the molecular weight data during regression on the Gamma parameters. The argument input_comp is the name of a component within the input "plus" fraction and w is the desired value of the weight factor for the specified component's molecular weight. If w is not specified, a value of 1 will be assumed. Once a WEIGHTING sub-command is used, an input_comp or the AVERAGE sub-command must be supplied as its argument and must appear on the same line. Multiple WEIGHTING commands can be issued.

The calculation of the model is essentially the determination of the four model parameters by means of regression. The user has control over the regression by specifying the starting values and the upper & lower bounds of these parameters. The four model parameters are specified by optional sub-commands known within the context of the GAMMA sub-keyword. These are described below.

SHAPE: The molar distribution has an average MW and the function has a particular shape, which can

(a) decay exponentially from a finite value at the origin MW; the SHAPE parameter is 1,

(b) decay faster than exponentially from an infinite value at the origin MW; the SHAPE parameters are less than 1 (typically no less than 0.4),

(c) decay slower than exponentially after rising from zero at the origin MW and going through a maximum; the SHAPE parameters are greater than 1 (typically no greater than 5).

Up to 3 arguments can follow SHAPE, the maximum among them being its specified upper bound, the lowest being its specified lower bound, and the first being its initial value. The default and limiting values are given in the table below.
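The three shape regimes can be checked numerically. The sketch below assumes the model is the standard three-parameter gamma density often used for plus-fraction molar distributions; this section does not state the exact functional form Streamz uses, and the names `gamma_density` and `mode_mw` are illustrative only. Truncation at the BOUNDARY MW is not modeled.

```python
import math

def gamma_density(mw, shape, origin_mw, average_mw):
    """Assumed three-parameter gamma density p(M), valid for M > origin_mw."""
    beta = (average_mw - origin_mw) / shape  # scale chosen so the mean is average_mw
    x = mw - origin_mw
    if x <= 0.0:
        raise ValueError("mw must exceed the origin MW")
    return x ** (shape - 1.0) * math.exp(-x / beta) / (beta ** shape * math.gamma(shape))

def mode_mw(shape, origin_mw, average_mw):
    """MW of the density maximum; it exists only for shape > 1 (case c)."""
    if shape <= 1.0:
        return None
    beta = (average_mw - origin_mw) / shape
    return origin_mw + (shape - 1.0) * beta
```

With a shape of 1 the density approaches the finite value 1/beta at the origin MW, with a shape below 1 it grows without bound there, and with a shape above 1 it rises from zero to a maximum at `mode_mw`.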

BOUNDARY: The model’s boundary MW is given by the product of the BOUNDARY parameter (generally between 0 and 1) and the MW of the input component specified as the first argument to the GAMMA sub-keyword. To allow flexibility, the actual boundary MW can be entered instead of multipliers as arguments to BOUNDARY. If the maximum of the 3 arguments is less than or equal to 1, they are interpreted as multipliers, otherwise as actual molecular weights.

AVERAGE: The model’s average MW is given by the product of the AVERAGE parameter (typically around 1) and the calculated average MW of the portion of the input stream being modeled. To allow flexibility, the actual model MW can be entered instead of multipliers as arguments to AVERAGE. If the maximum of the 3 arguments is less than the molecular weight of the first component participating in the Gamma modeling, they are interpreted as multipliers, otherwise as actual molecular weights.

ORIGIN: The model’s origin MW is given by the product of the ORIGIN parameter (between 0 and 1) and the model’s boundary MW. Up to 3 arguments can follow ORIGIN, the maximum among them being its specified upper bound, the lowest being its specified lower bound, and the first being its initial value. The default and limiting values are given in the table below. To prevent the origin MW from accidentally exceeding the boundary MW during regression, the origin MW can only be entered as multipliers.

Table: Various values for the 4 Gamma parameters.

| Parameter | Minimum value allowed | Maximum value allowed | Default initial value | Default lower bound | Default upper bound |

| SHAPE | 0.05 | 20.0 | 1.0 | 0.4 | 5.0 |

| BOUNDARY | 0.0 | 1.0 | 0.9 | 0.5 | 1.0 |

| AVERAGE | 0.05 | 20.0 | 1.0 | 0.8 | 1.2 |

| ORIGIN | 0.0 | 1.0 | 0.7 | 0.0 | 1.0 |
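The argument rules for the four parameters can be sketched as follows. This is an illustration of the rules as worded in this section, not Streamz source code: `interpret_args` and `boundary_mw` are hypothetical names, and treating a single argument as a fixed (non-regressed) value follows the reading of Example 3.

```python
def interpret_args(args):
    """Return (initial, lower, upper) from 1 to 3 numeric arguments.

    The first argument is the initial value, the smallest is the lower
    bound and the largest is the upper bound. A single argument therefore
    fixes the parameter (lower == upper == initial).
    """
    if not 1 <= len(args) <= 3:
        raise ValueError("expected 1 to 3 arguments")
    return args[0], min(args), max(args)

def boundary_mw(args, first_comp_mw):
    """Convert BOUNDARY arguments to actual MWs.

    If the largest argument is <= 1 the values are multipliers of the MW
    of the first participating input component; otherwise they are
    already molecular weights.
    """
    if max(args) <= 1.0:
        return [a * first_comp_mw for a in args]
    return list(args)
```

For instance, `interpret_args([1.0, 0.5, 5.0])` reproduces the SHAPE line of Example 4: an initial value of 1.0 bounded by 0.5 and 5.0.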

The SPLIT (or its aliases DELUMP and LUMP) sub-keyword is one of the two methods Streamz uses to convert streams (the other being GAMMA distribution modeling). It specifies the split factors for conversion of a single input component to one or many output components. A split factor is the fraction of a component in the input stream that goes into a specified component of the output stream. The first argument to this keyword is always the name of the input component (input_comp). It is followed by a series of doublets (output_comp spl_fctr), each consisting of an output component name and its split factor, in either order. Either element of each doublet may be omitted, however. If the component is omitted, it defaults to the one following that of the previous doublet (with the first doublet defaulting to the first component). If a split factor is omitted, it defaults to 1. The doublets continue until a keyword that is not a component name is encountered. Since each SPLIT command defines the splitting of a single input component, there are typically as many SPLIT commands as there are components in the input characterization (minus those covered by the GAMMA command). An input component not covered by either the GAMMA or the SPLIT commands is lost from the stream during the conversion.
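The doublet defaults described above can be sketched as a small parser. This is an illustration only: `parse_split` is a hypothetical helper, the greedy pairing of adjacent tokens is an assumption about how "either order" is resolved, and the output component list in the usage note is invented apart from CO2.

```python
def is_number(token):
    """True if the token parses as a number (component names do not)."""
    try:
        float(token)
        return True
    except ValueError:
        return False

def parse_split(tokens, output_components):
    """Parse the doublets that follow input_comp into {output_comp: factor}."""
    splits = {}
    prev_index = -1  # an omitted first component defaults to index 0
    i = 0
    while i < len(tokens):
        first = tokens[i]
        second = tokens[i + 1] if i + 1 < len(tokens) else None
        # Assumption: two adjacent tokens form one doublet only if exactly
        # one of them is a number (name + factor, in either order).
        paired = second is not None and is_number(first) != is_number(second)
        pair = [first, second] if paired else [first]
        names = [t for t in pair if not is_number(t)]
        numbers = [float(t) for t in pair if is_number(t)]
        # Omitted component: the one following the previous doublet's component.
        index = output_components.index(names[0]) if names else prev_index + 1
        # Omitted split factor: defaults to 1.
        splits[output_components[index]] = numbers[0] if numbers else 1.0
        prev_index = index
        i += len(pair)
    return splits
```

Applied to the tokens `CO2 0.03514 0.00462 0.84342 0.11682` with a hypothetical output list `["N2", "CO2", "C1", "C2", "C3"]`, the first factor goes to CO2 and the remaining three go to the three components that follow it.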

The LUMP alias allows a more intuitive sub-keyword when many input components are being lumped into a single output component (a very typical usage). This sub-keyword should not be confused with the similarly named primary keyword LUMP (and will not conflict with it). The user should, however, be careful not to try issuing a primary LUMP command immediately following a CONVERT command that doesn't conclude with an END statement, but otherwise there should be no conflicts.

The SET sub-keyword is known within the context of the CONVERT command and is used to specify control variables and set their values. Variables of pressure, temperature, time or distance need to be assigned units when their values are set. The set of split factors become piecewise linear functions of the set variable. If one specifies multiple control variables, the split factors become piecewise linear functions of those variables as well. The first argument to the SET sub-keyword is the name of the primary control variable, followed by its value and units (if applicable), in either order. Additional control variables, along with their values and units, could be given as additional arguments, as long as only one input line is used for the entire set of control variables. If the split factors are known to be constant, there is no need for the SET sub-keyword.

The SPLITS sub-keyword is known within the context of the CONVERT command and is used to specify a file nickname for writing out split factors. These split factors are calculated by Streamz, either by interpolation based on the values of the control variables, or during the Gamma distribution modeling (or both). A split factor table is written out for each stream, in a format that can easily be used in a Streamz input file. A separate SPLITFILE primary keyword must first be used, however, to open an actual file and associate it with this nickname.

Example 1: Simple use of CONVERT, with the SPLIT sub-keyword.

RESTORE 'EOS8'
CONVERT EOS6
SPLITS SPL
SPLIT C1N2 C1N2
SPLIT C2CO2 C2 .95 CO2 .05
SPLIT C3-6 C3-6
SPLIT C7+1 C7+1_1 .5 C7+1_2 .5
SPLIT C7+2 C7+2
SPLIT C7+3 C7+3

This example first RESTOREs the CHARACTERIZATION named ‘EOS8’ and makes it “current”. The CONVERT command then specifies a conversion from a characterization named ‘EOS6’ to the current one (i.e. ‘EOS8’). No input, output or conserving units are mentioned, hence they all default to MOLES based on the rules specified earlier. The absence of any SET command indicates that the split factors are constant. The SPLITS sub-keyword instructs the program to write out all split factors to a file nicknamed “SPL”. The SPLIT sub-keyword specifies that the conversion should use split factors for partitioning input components into output components. All input components, except C2CO2 and C7+1, partition fully into single output components, because no spl_fctr is given in the doublet following the input_comp for these components (thus defaulting to 1). 95% of C2CO2 partitions into C2, and 5% into CO2 of the output characterization. Similarly, C7+1 partitions 50-50 into C7+1_1 and C7+1_2 of the output characterization.

Example 2: Simple use of CONVERT, with the GAMMA sub-keyword.

CONVERT EOS6, FROM MOLES TO MOLES, CONSERVING MASS
GAMMA INF1 OUTF1

This example assumes the “current” characterization is the one the user wants to convert ‘EOS6’ to. Options specify that the input streams should be in MOLES and that the output streams written to output files will be in MOLES too. The option CONSERVING MASS ensures that the mass of each stream is conserved when it is fit to the Gamma distribution model. GAMMA instructs the program to use the gamma distribution for the conversion. The first argument to this command, INF1, instructs the program to use this and heavier (MW-wise) input components to fit to the model. Even if the input characterization does not list its components in increasing order of molecular weight, the program orders them internally and selects the correct components. The molecular weights and molar amounts of these selected components are used to calculate model parameters. Since no parameters or their parm_ini, parm_max, or parm_min are listed, the program uses default values for all of them and performs regression to determine the values that give the best fit (in a least-squares sense). The program then selects OUTF1 and heavier output components (MW-wise), and uses their entered molecular weights and the model parameters to calculate molar amounts for each, while conserving the overall MASS of the stream. Even though the input and output streams are in MOLES, the program can conserve mass because the molecular weights of the participating components are known, making mass and moles internally compatible.

Example 3: Use of CONVERT, with GAMMA sub-keyword and parameters.

CONVERT EOS6, GAMMA INF1 OUTF1, FILE GAM1 SHAPE 1.5, BOUND .9, AVE 1.0, ORIGIN 1.0

In this example, the user instructs the program to CONVERT input streams complying with the characterization ‘EOS6’ (which contains INF1 as one of its components) to the “current” characterization (which contains OUTF1 as one of its components). None of the FROM, TO, or CONSERVING units are specified, so they all default to MOLES. GAMMA distribution is to be used for the conversion. The program will select INF1 and heavier (MW-wise) input components to fit to the model. All four parameters are listed with a single argument each, hence regression is not used. It is probable that the input stream contains a fluid, a sample of which had been previously fit to the Gamma distribution model, resulting in these values. The program will then select OUTF1 and heavier output components (MW-wise), and use their entered molecular weights and the model parameters to calculate molar amounts for each, while conserving the overall MOLES of the stream.

Example 4: Use of CONVERT, with GAMMA and SPLIT.

CONVERT "6-COMPONENT"
GAMMA X3 C7
SHAPE 1.0 0.5 5.0
BOUND 0.7 0.5 1.0
AVERAGE 1.0, ORIGIN 1.0
SET PRESSURE 423 BAR
SPLIT X1 CO2 0.03514 0.00462 0.84342 0.11682
SPLIT X2 C3 0.50204 0.07055 0.19427 0.05624 0.08078 0.09611
SET PRESSURE 373.3 BAR
SPLIT X1 CO2 0.03459 0.00485 0.84757 0.11299
SPLIT X2 C3 0.51820 0.07066 0.19165 0.05460 0.07709 0.08779
SET PRESSURE 318.2 BAR
SPLIT X1 CO2 0.03397 0.00492 0.85170 0.10941
SPLIT X2 C3 0.53182 0.07159 0.19091 0.05227 0.07273 0.08068

In this example, a conversion from a characterization named ‘6-COMPONENT’ to the current one (having a component named C7, and two others named CO2 and C3) is being defined. Input components X3 and heavier are instructed to participate in the Gamma distribution fit, and output components C7 and heavier obtain amounts based on the calculated model. Regression will be performed to calculate the model parameters, but the parameters AVERAGE and ORIGIN are fixed. SHAPE initially has a value of 1.0 and can vary between 0.5 and 5.0 while regression is being performed. BOUND initially has a value of 0.7 and can vary between 0.5 and 1.0. Input and output streams are expected to be in molar units and MOLES will be conserved during the conversion.

The example also specifies that pressure-dependent SPLIT factors determine the partitioning of input components X1 and X2. Three pressure nodes are specified and at each, X1 partitions into CO2 and 3 consecutively named output components while X2 partitions into C3 and 5 further consecutive output components.
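Between the pressure nodes, the split factors are piecewise linear in the control variable. A minimal sketch of that interpolation for the X1 to CO2 factor of Example 4 is given below. The function name `interpolate_split` is hypothetical, and holding the end values outside the tabulated range is an assumption, since this section does not say how Streamz extrapolates.

```python
def interpolate_split(nodes, value):
    """Piecewise-linear interpolation over (control_value, split_factor) nodes."""
    pts = sorted(nodes)
    if value <= pts[0][0]:
        return pts[0][1]   # assumption: hold the end value below the range
    if value >= pts[-1][0]:
        return pts[-1][1]  # assumption: hold the end value above the range
    for (x0, y0), (x1, y1) in zip(pts, pts[1:]):
        if x0 <= value <= x1:
            return y0 + (y1 - y0) * (value - x0) / (x1 - x0)

# X1 -> CO2 split factors at the three pressure nodes (bar) of Example 4.
x1_to_co2 = [(423.0, 0.03514), (373.3, 0.03459), (318.2, 0.03397)]
print(interpolate_split(x1_to_co2, 400.0))
```

A stream at 400 bar therefore gets an X1 to CO2 factor between the 373.3 bar and 423 bar values.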

Example 5: Use of the new WEIGH sub-keyword to CONVERT.

GAMMA C7 C7+1 IGNORE C15 WEIGH C15 0.5 WEIGH C16 0.2 WEIGH AVERAGE

This example considers the conversion of a 14-component input "plus" fraction (C7, C8, C9, ..., C19, C20+) into an output "plus" fraction beginning with C7+1. The amounts (moles or mass) of C15 through C20+ will be ignored. The C7 through C14 molecular weights will be weighted by 1.0, the C15 MW will be weighted by 0.5, the C16 MW will be weighted by 0.2, the C17 through C20+ MWs will be weighted by 0.0, and the distribution's average MW will be weighted by 1.0.

When a GAMMA distribution is fit to an input "plus" fraction consisting of n components, there are n + 1 molecular weights that can act as data to be matched: the molecular weight of each component and the average molecular weight of the entire "plus" fraction. Each of these molecular weights can now be weighted separately (by its own WEIGHTING command). Without an overriding WEIGHTING command, the default weight factor is 0 for (a) the MW of the heaviest component in the "plus" fraction, (b) the MW of each IGNORED component, and (c) the average MW of the entire "plus" fraction. For the MW of any other component, the default weight factor is 1. See Example 5 for a clarifying usage. The new default weight factors are the same as the hard-wired weight factors in the previous version of Streamz, with one important exception. Unless the GAMMA command's IGNORE option was in effect, Streamz 1.01 weighted the "plus" fraction's average MW by Max(n-1,1) instead of by 0 (the new default). That is the only difference, and it arises only when the IGNORE option is NOT used. The reason for the change was to completely remove the influence of the heaviest component's MW (which is usually quite uncertain) on the default GAMMA fitting. This was not the case when the average MW was weighted.
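The default weighting rules and their overrides can be written out as a short sketch. The function `default_weights` is hypothetical and only mirrors the rules as stated here; components are assumed to be listed from lightest to heaviest, and `None` stands for a WEIGHT command given without a value of w.

```python
def default_weights(components, ignore_from=None, overrides=None):
    """Return MW weight factors per component plus an 'AVERAGE' entry."""
    weights = {name: 1.0 for name in components}
    weights[components[-1]] = 0.0      # (a) heaviest component
    if ignore_from is not None:
        start = components.index(ignore_from)
        for name in components[start:]:
            weights[name] = 0.0        # (b) IGNOREd components
    weights["AVERAGE"] = 0.0           # (c) average MW of the plus fraction
    for name, w in (overrides or {}).items():
        weights[name] = 1.0 if w is None else w  # WEIGHT without w means 1
    return weights

# The "plus" fraction of Example 5: C7, C8, ..., C19, C20+.
plus_fraction = [f"C{n}" for n in range(7, 20)] + ["C20+"]
w = default_weights(plus_fraction, ignore_from="C15",
                    overrides={"C15": 0.5, "C16": 0.2, "AVERAGE": None})
```

Under these assumptions `w` reproduces the weights listed for Example 5: 1.0 for C7 through C14, 0.5 for C15, 0.2 for C16, 0.0 for C17 through C20+, and 1.0 for the average MW.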

[IF filter_name]

[WEIGHT [BY|OVER] var_spec [AND var_spec]...]

[OVER var_spec [AND var_spec]...]

[NORMALIZE]

[SCALE value]

[TO file_nickname [TO file_nickname|AND file_nickname]...]

The COPY command initiates a read/convert/write operation of streams from all open input stream files to all open output stream files (unless overridden by the TO option). Any conversion required during the operation, based on the characterizations associated with those files, is performed automatically.

Sub-keywords and options modify the action of the COPY command.

| Sub-Keywords | ALIAS |

| IF | |

| NORMALIZE | NORM |

| SCALING | SCALE, SCAL |

| AND | |

| WEIGHT OVER | |

The IF option, followed by the name of a previously defined filter (using the FILTER command), results in the input stream being processed through that filter before being converted and written to the output stream file.

The NORMALIZE sub-keyword converts the amounts of the output stream into fractions. If the input stream is in molar quantities, the output will be in molar fractions.

The SCALING sub-keyword multiplies the output streams by a factor. This can be used for conversion from one set of units (e.g. moles/day) to another (moles/hour) if the output streams are required in a particular unit by the program using them. If SCALE 100 is used with the NORMALIZE command, the output streams are written in percentages instead of fractions.

The AND sub-keyword can be used to specify multiple var_spec to the WEIGHT and OVER options. var_spec is the name of a numerical VARIABLE or DOMAIN, followed by its desired units, if applicable. AND can also be used to specify multiple output file_nicknames, instructing the COPY command to write to only a subset of the open output stream files.

When WEIGHT and OVER are used in the COPY command, the converted stream is NORMALIZED (if requested) and then multiplied by Scale*Product(Weight-Vars)/Product(Over-Vars). Division by zero will never occur. If any Over-Var (defined below) is zero, the resulting stream will normally be set to zero, unless the same variable is also used as a Weight-Var (again, defined below). In that case, the Over-Var and Weight-Var cancel (except for the ratio of their units, if applicable), thereby avoiding the zero-divided-by-zero condition. In other words, the WEIGHT option applies the product of the weight variables to each converted stream after normalization (if requested), and the stream is then multiplied by the value of SCALE (if used) to give the final stream. The argument to WEIGHT is one or more variables or domains (including units if applicable). Multiple weight variables must be separated by the AND keyword. An optional BY keyword is allowed in case the name of the weight variable happens to be "over". It is also possible to use the OVER keyword in conjunction with the WEIGHT keyword to mean a combined WEIGHT OVER option using the same variables for both options. This is a typical usage of these options when portions of the original stream are affected by a filter defined with the FILTER command.

A "Weight-Var" is the value of a variable or the size of a domain (upper variable minus lower variable) requested by the WEIGHT option. The values or sizes are calculated in their requested units (if applicable) before any variables might be altered by filtering. This allows rates to be converted correctly to cumulative amounts, for example. Any undefined Weight-Var is assumed to be zero.

An "Over-Var" is the value of a variable or the size of a domain (upper variable minus lower variable) requested by the OVER option. Variable values are calculated in their requested units (if applicable) before any variables might be altered by filtering, but domain sizes are calculated in their requested units (if applicable) after the COPY command's filter has altered any necessary variables. This allows cumulative amounts to be converted correctly to rates, for example. Any undefined Over-Var is assumed to be zero.
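The multiplier Scale*Product(Weight-Vars)/Product(Over-Vars), including the zero handling, can be sketched as below. This is an illustration of the rule as worded in this section, with a hypothetical function name `copy_factor`; the values are assumed to be already expressed in their requested units, and the unit ratio mentioned for the cancelling case is ignored.

```python
def copy_factor(scale, weight_vars, over_vars):
    """Multiplier applied to a converted (and optionally normalized) stream.

    weight_vars and over_vars map variable or domain names to their values.
    """
    weights = dict(weight_vars)
    numerator, denominator = scale, 1.0
    for name, over_value in over_vars.items():
        if over_value == 0.0:
            if name in weights:
                # Same variable used for WEIGHT and OVER: the pair cancels,
                # which avoids the zero-divided-by-zero condition.
                del weights[name]
                continue
            return 0.0  # a zero Over-Var on its own sets the stream to zero
        denominator *= over_value
    for value in weights.values():
        numerator *= value
    return numerator / denominator
```

For a stream whose TIME domain is 30 days before filtering and 10 days after, `copy_factor(1.0, {"TIME": 30.0}, {"TIME": 10.0})` gives a multiplier of 3.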

Example 1: Simplest use of COPY command without any options

COPY

In this example, all streams from all open input stream files are copied to all open output stream files. Conversion is automatically performed whenever the characterizations of the two files are different.

Example 2: Advanced use of COPY with FILTER, DEFINE, and WEIGHT OVER

DOMAIN TIME T1 T2
DEFINE T1 0.0
DEFINE T2 1.0
FILTER YEAR: TIME GE ?T1? YEARS AND TIME LE ?T2? YEARS
COPY IF YEAR, WEIGHTING OVER TIME (DAYS)

This example assumes that time variables T1 and T2 are associated with each stream. It declares a domain called ‘TIME’ made up of T1 and T2. It then associates the tokens T1 and T2 with the strings 0.0 and 1.0, respectively. A filter called ‘YEAR’ is defined whose criterion is the interval 0.0-1.0 years. All streams satisfying the filter ‘YEAR’ are converted. For each, the domain ‘TIME’ of the input stream (i.e. T2 - T1 for this stream) is calculated and multiplies each stream quantity. The requested domain (i.e. the domain of the resulting stream after filtering) is then calculated and divides the stream. This gives back rates after starting with rates. The formula applied is:

Final_Stream = ((TIME domain for each stream before filtering) * Stream) / (TIME domain after filtering)

Example 3: Use of COPY with DEFINE, FILTER, TO and SCALING options.

DEFINE WELL 'P5010'
FILTER WELL WELL EQ 'P5010'
STREAMFILE HRLY OUTPUT ?WELL?_HOURLY.STR
COPY IF WELL TO HRLY, SCALING_BY 0.041666667

This example first associates the token WELL with the string ‘P5010’. It then defines a filter (also called WELL) which is satisfied if the WELL variable (assumed to be associated with streams in input stream files) has the value ‘P5010’. The STREAMFILE command opens a file with a name made up of the replacement string for ?WELL? (P5010), and the string ‘_HOURLY.STR’, and associates the nickname “HRLY” with it. The COPY command then instructs to write all streams for which the filter holds true to the file with nickname “HRLY”, multiplying the stream with the factor 0.041666667 (to convert daily production to hourly) before doing so.

(see also: ALIAS Table for alternative keyword)

The DEFINE command is used to associate a token with a replacement string. This command allows a run-time replacement of any occurrence of the token, surrounded by question marks, with its associated string. All replacements will occur before any other parsing of an affected input line. The definition will persist throughout the rest of the driver file in which it is issued, carrying over into INCLUDE files as well. It will not carry back into a parent driver file, however. Used by itself, the DEFINE command allows use of generic Streamz driver files on multiple cases, just by changing the replacement string for the token at one place.

In conjunction with the INCLUDE command, the DEFINE command offers a very powerful utility for executing the same set of generic instructions on the same token after it gets redefined (see Get Started with Streamz for an example).

Example 1: Simple use of DEFINE

DEFINE CASE 'EOS6'
TITLE 'BO TO COMPOSITIONAL (?CASE?) CONVERSION'
INCLUDE ?CASE?.CHR
STREAMFILE INP1 INPUT BO.STR
INCLUDE ?CASE?.CNV
STREAMFILE OUT1 OUTPUT ?CASE?.STR
COPY

In this example, a token (CASE) is defined and set equal to ‘EOS6’. Before the TITLE command is parsed, the replacement of ?CASE? with 'EOS6' (without quotes) occurs. The boxed title in the output file will have 'EOS6' within parentheses instead of the original ?CASE?. Similarly, the two INCLUDE commands include the files EOS6.CHR and EOS6.CNV, and the second STREAMFILE command opens the file EOS6.STR for output.
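The replacement step can be pictured as a plain text substitution performed on each input line before it is parsed. The sketch below is illustrative only: `expand_tokens` is a hypothetical helper, and leaving an undefined token untouched is an assumption, since this section does not say what Streamz does in that case.

```python
import re

def expand_tokens(line, definitions):
    """Replace every ?TOKEN? in a driver-file line with its replacement string."""
    def substitute(match):
        token = match.group(1)
        # Assumption: a token with no definition is left as written.
        return definitions.get(token, match.group(0))
    return re.sub(r"\?(\w+)\?", substitute, line)

definitions = {"CASE": "EOS6"}
print(expand_tokens("STREAMFILE OUT1 OUTPUT ?CASE?.STR", definitions))
```

Changing the single entry in `definitions` is the analogue of editing one DEFINE line to rerun a generic driver file on another case.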

The DOMAIN keyword defines a special variable called name, comprising two existing normal VARIABLEs of the same type, var1 and var2. It is used to specify an interval between these normal variables. If these two normal variables are associated with a stream, the DOMAIN is also associated with it automatically. The value of the DOMAIN for a particular stream depends on the values of the associated variables var1 and var2. It is equal to the upper variable minus the lower variable.

The utility of this keyword is for selecting portions of streams by using a DOMAIN in a FILTER. For example, assume that time variables T1 and T2 are defined and associated with each stream. These could be the start and end times of a time-step in a simulation. Suppose a FILTER is defined to be true if a DOMAIN, comprising T1 and T2, is greater than 1 year and less than 2 years. This is the same as saying that the lower bound of the interval (T1) is greater than 1 year and the upper bound of the interval (T2) is less than 2 years. Once defined, only the portion of the stream satisfying the interval will be selected for processing by the COPY, COMBINE, TABULATE etc. commands. For the COMBINE command, the value of the DOMAIN for each stream also needs to be calculated for the WEIGHT and OVER options.

DOMAIN may be made up of any type of VARIABLE, but both must be of the same type. It is useful whenever a portion of the stream is to be selected based on an interval of defined and associated variables.
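One way to picture "the portion of the stream satisfying the interval" is as the overlap between the stream's own interval and the filter's interval. The sketch below assumes the selected portion is proportional to that overlap; this section does not spell out the exact rule Streamz applies, and `selected_fraction` is a hypothetical name.

```python
def selected_fraction(t1, t2, lower, upper):
    """Fraction of the stream interval [t1, t2] lying inside [lower, upper]."""
    if t2 <= t1:
        raise ValueError("expected t2 > t1")
    overlap = min(t2, upper) - max(t1, lower)
    return max(overlap, 0.0) / (t2 - t1)

# A time-step from 0.5 to 1.5 years tested against a 0 to 1 year filter.
print(selected_fraction(0.5, 1.5, 0.0, 1.0))
```

Under this assumption a time-step lying wholly inside the filter interval is selected in full, and one lying wholly outside is not selected at all.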

Example 1: Use of DOMAIN

DOMAIN TIME T1 T2
FILTER YEAR1 TIME GE 0 (YEAR) AND TIME LT 1 (YEAR)
COPY IF YEAR1

In this example, a time DOMAIN ‘TIME’ is defined, comprising the time variables T1 and T2. A FILTER ‘YEAR1’ is next defined where the interval is between 0 and 1 year. The COPY command converts only those streams (or portions of streams) that satisfy this filter.

Example 2: Use of DOMAIN

VARIABLE CONN_I INTEGER
VARIABLE CONN_J INTEGER
VARIABLE CONN_K INTEGER
DOMAIN I_DIR CONN_I CONN_I
DOMAIN J_DIR CONN_J CONN_J
DOMAIN K_DIR CONN_K CONN_K
FILTER BLOCK_A I_DIR GE 1250 AND I_DIR LE 1500 AND J_DIR GE 250 AND J_DIR LE 1000 AND K_DIR GE 100 AND K_DIR LE 2500
COPY IF BLOCK_A TO BLKA

In this example, integer VARIABLEs are defined to represent grid block indices in a reservoir simulator. Three DOMAINs, one each in the I, J, and K directions, are declared. A FILTER called ‘BLOCK_A’ is next defined to select intervals in each DOMAIN. The COPY command converts only those streams satisfying the filter and writes them to the specified file.

This keyword instructs the program to write out all the lines read from input (driver) file to the Standard Output (log) file. Normally Streamz only writes back certain information important to the run, some information about its execution, warnings and errors. By use of this keyword with the ON option (or without any options which means the same as the ON option) the user forces the program to write out all lines read from the input (driver) file. The written lines also contain information about the line number in the input file.

Use of the keyword with the OFF option suspends the writing back of the lines read for the rest of the execution of the program, unless echoing is turned on again with the ON option. The program starts with ECHO OFF in effect, without it being explicitly specified.

Example 1

ECHO
. . .
ECHO OFF
. . .

In this example, an ECHO command is issued, triggering the echoing of all lines from the input (driver) file. Without any arguments it is interpreted as ECHO ON. Later in the data set an ECHO OFF command turns off the echoing for the rest of the execution of the program.

The END keyword declares the END of the scope of the current primary keyword. When a primary keyword is in effect, some sub-keywords and options may be recognized within its context. This keyword explicitly tells the program that no further sub-keywords to the current primary keyword are expected and that the program should expect a primary keyword.

One particular use of this keyword is to signify the END of the tabular input of an EOS property table, triggered with the COMP primary keyword, as well as of the BIPS table. Without the use of END, a blank line is needed to end these tables.

Another use is when an INCLUDE file is used for some particular purpose (e.g. CHARACTERIZATION or CONVERT). It is recommended to end the file with the END keyword. Otherwise the Standard Output (log) file may contain information about returning to the parent file before echoing some information read from the included file.

A third use is immediately after the STREAMFILE command. This command looks for sub-keywords within its own context before actually opening the file. When it encounters an INCLUDE command, it assumes these sub-keywords may be present in the included file. If the argument to the INCLUDE command contains path information, the program changes the ‘current’ directory to this path. If, in the included file, the program then encounters a primary keyword, the scope of the STREAMFILE keyword ends automatically and the file specified is opened. The user can help the program by explicitly specifying the END of the primary STREAMFILE command.

Thus the use of the END keyword is recommended to avoid such side effects.

Example 1: Use of END to end the EOS property table input

COMP MW
C1 44
C4 74
C10 128
END
. . .

Example 2: Use of END to end the STREAMFILE command

STREAMFILE INP1 INPUT INPUT.STR
END

This keyword declares the end of file to the program. The program can proceed with execution ignoring the rest of the information written after EOF keyword.

This is a useful keyword if the user has a large data-set, but wants only to execute an initial portion of it. If the EOF keyword is inserted at the particular point, the rest of the data-set need not be deleted.

The use of this keyword is the only way an end of file can be conveyed to the program if the Primary Input (driver) file has been defined to be the keyboard (by cancelling the prompt). In such cases the user enters commands from the keyboard line by line. The program will continue to prompt for the next line until the EOF keyword is encountered. It then sends all the input lines to the program as if they came from the input file.

Example 1: Use of EOF to specify the end of file.

TITLE 'EXAMPLE OF EOF'
COMP MW
C1 44
C4 74
C10 128
END
. . .
;SOME COMMANDS
. . .
EOF
; OTHER COMMANDS WHICH ARE NOT EXECUTED DUE TO EOF COMMAND ABOVE
. . .

(see also: ALIAS Table for alternative keywords)

The EOS keyword declares the equation of state to which the next characterization applies. Each characterization must have an associated EOS. The allowed equations of state are listed later in this section.

The argument to this keyword specifies the EOS to be associated. The default (modified Peng-Robinson 1979; PR) is associated if none is specified.

This keyword is required if an EOS calculation is one of the tasks to be performed by STREAMZ. The current version of STREAMZ does not require it; it is recognized for completeness and forward compatibility.

The following EOSes are recognized:

| RK | : | Original Redlich-Kwong EOS |

| SRK | : | Soave-Redlich-Kwong EOS |

| PR77 | : | Original Peng-Robinson EOS |

| PR | : | Modified Peng-Robinson EOS (default) |

Example 1: Use of EOS before a CHARACTERIZATION definition

EOS SRK
CHAR EOS3
COMP MW
C1 44
C4 74
C10 128
END

In this example, the Soave-Redlich-Kwong EOS (SRK) is specified and is associated with the "EOS3" characterization.

(see also: ALIAS Table for alternative keywords)

[[AND|OR][NOT] fltr_name | fltr_construct]...]

This keyword defines and names a filter (a set of criteria which must be true for it to be satisfied). The first argument to this keyword is the name of the filter being defined (fltr_name). Multiple (at least one) arguments may follow, each either a previously named filter (using previous FILTER commands) or a filter construct, each separated by the sub-keywords AND, OR, or NOT. The syntax of a fltr_construct is: var_name op var_value. The var_name is a previously defined VARIABLE or DOMAIN. Additionally it can also be a lumped fraction defined using the LUMP command. The op is one of the allowed operators (see table 2), and var_value is the value of the var_name. If the var_name is a lumped fraction, the var_value is calculated based on the value of the quantity for each stream.

The utility of this keyword is the ability to use the named filters in other manipulation commands (COPY, COMBINE, TABULATE etc.) and select only portions of the input streams before applying the actual manipulative command. Specifically, it checks the values of variables associated with streams. Based on all the values of variables (and/or domains) which form part of the filter, and their evaluations (to either true or false), whole or part of the stream will be selected for the appropriate action (COPY, COMBINE, TABULATE etc.).

A filter is not predefined to be either true or false, but is evaluated at run time for each stream. The evaluations start from the base filter. For multiple filters and/or filter constructs forming a new filter, the evaluation is done from left to right on left-hand side (LHS) and right-hand side (RHS) pairs separated by the sub-keywords AND or OR. If the sub-keyword used is NOT, only the RHS of the filter/construct is considered for evaluation. Normal logical results, as shown in Table 1, are returned for each such evaluation:

Table 1: Logical table of filter/construct for evaluation.

| LHS filter/construct | Sub-Keyword | RHS filter/construct | Result of evaluation |

| True | AND | True | True |

| True | AND | False | False |

| True | OR | True | True |

| True | OR | False | True |

| | NOT | True | False |

| | NOT | False | True |

Once each filter/construct has evaluated to either true or false, the next one on the right is paired with it and evaluated. Evaluation of a NOT sub-keyword with its RHS is done before the pairing, if applicable. Use of AND results in a shrinkage of the portion of the stream selected. Use of OR results in an expansion of the portion of the stream selected. Use of NOT results in the selection of that part of the stream which does not satisfy the filter. Use of NOT is not permitted with filters that contain a domain variable.
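The left-to-right pairing described above differs from the operator precedence of most programming languages, where AND binds more tightly than OR. A minimal sketch of the evaluation order follows; `evaluate_filter` is a hypothetical helper operating on values that have already been evaluated to true or false for one stream.

```python
def evaluate_filter(terms):
    """Evaluate a list such as [True, "OR", "NOT", False, "AND", True]
    strictly from left to right, as described for filter constructs."""
    def next_value(pos):
        # NOT is applied to the operand on its right before any pairing.
        if terms[pos] == "NOT":
            value, pos = next_value(pos + 1)
            return (not value), pos
        return bool(terms[pos]), pos + 1

    result, pos = next_value(0)
    while pos < len(terms):
        op = terms[pos]
        rhs, pos = next_value(pos + 1)
        if op == "AND":
            result = result and rhs
        elif op == "OR":
            result = result or rhs
        else:
            raise ValueError(f"unexpected keyword: {op}")
    return result

# (True OR False) AND False under left-to-right pairing.
print(evaluate_filter([True, "OR", False, "AND", False]))
```

With left-to-right pairing the last line evaluates to false, whereas the Python expression `True or False and False` is true.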

Table 2: Valid operators (op) used in filter constructs (fltr_construct).

| Operator | Meaning | Usage with types |

| GT | greater than | Variables / Domains |

| GE | greater than or equal to | Variables / Domains |

| LT | less than | Variables / Domains |

| LE | less than or equal to | Variables / Domains |

| EQ | equal to | Variables / Domains |

| NE | not equal to | Variables / Domains |

| SW | starts with | String Variables |

| EW | ends with | String Variables |

| CN | contains | String Variables |

| AND | logical and | Variables / Domains |

| OR | logical or | Variables |

| NOT | logical not | Variables |

When the first six operators (GT to NE) are used with string variables, the comparison is alphabetical, based on position in the ASCII character set. Hence a filter specified as FILTER a-wells well GE ‘A’ AND well LT ‘B’ selects all streams that have a variable named well whose value starts with the letter ‘A’.
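The equivalence between the range comparison and "starts with" can be checked with ordinary string ordering. The Python sketch below assumes plain ASCII values and a case-sensitive comparison; whether Streamz itself treats case this way is not stated here, and the well names are invented for the illustration.

```python
def in_a_range(well):
    # Counterpart of: well GE 'A' AND well LT 'B'
    return "A" <= well < "B"


def starts_with_a(well):
    # Counterpart of: well SW 'A'
    return well.startswith("A")


# Hypothetical well names, chosen to probe the boundaries of the range.
wells = ["A-12", "AB3", "A", "B-1", "a-5", "9A"]
selected = [w for w in wells if in_a_range(w)]
same_as_sw = all(in_a_range(w) == starts_with_a(w) for w in wells)
```

In this sketch `selected` holds the three names beginning with an upper-case "A", and both tests agree on every name in the list.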

Example 1: Simple use of single filter.

FILTER A-WELLS WELL SW 'A'
COMBINE A-WELLS IF A-WELLS

In this example, a FILTER named ‘A-WELLS’ is defined to select those streams with an associated VARIABLE called ‘WELL’ (presumably of type STRING) having any value that starts with the character ‘A’. The actual action is performed by the COMBINE command after the filter selects (IF A-WELLS) the proper streams.

Example 2: Use of multiple filter constructs.

FILTER A.W.PRODS WELL SW 'A' AND WELL CN 'WEST' AND FLOW GT 0.0
COPY IF A.W.PRODS TO A-WESTERN-PRODUCERS

In this example, a FILTER named ‘A.W.PRODS’ is defined to select those streams with an associated VARIABLE called ‘WELL’ (presumably of type STRING) whose value starts with the character ‘A’ and contains the string “WEST”, and with a variable “FLOW” (of REAL type) whose value is greater than 0.0. A COPY command writes all streams satisfying this filter (IF A.W.PRODS) to an output stream file with the nickname ‘A-WESTERN-PRODUCERS’.

Example 3: Use of previous filter definitions in new filter.

LUMP TOTAL 6*1
FILTER VALID TOTAL MOLES GT 0.0
FILTER FC GROUP EQ 'FIELD CENTER'
FILTER SP GROUP EQ 'SOUTH PLATFORM'
FILTER FIELD FC OR SP AND VALID
TABULATE GROUP AND TIME (DAYS) IF FIELD TO FIELD_PROD

The example assumes that the characterization of the input streams contains 6 components. A lumped fraction called ‘TOTAL’ is defined to contain the whole of each of the 6 components. A FILTER named ‘VALID’ is defined to select those streams where the MOLES property of the lumped fraction (in effect the total moles of the stream) is greater than 0.0. This filters out those streams where there has been no production. A FILTER called ‘FC’ is defined to select streams having an associated VARIABLE called ‘GROUP’ (presumably of type STRING) with a value equal to “FIELD CENTER”. Another FILTER called ‘SP’ is defined to select streams having the same VARIABLE called ‘GROUP’ with a value equal to “SOUTH PLATFORM”. A new filter ‘FIELD’ uses all 3 previous filters with an OR and an AND sub-keyword; because evaluation is from left to right, FC OR SP is evaluated first and its result is then paired with VALID. A TABULATE command converts and writes all streams satisfying this filter (IF FIELD) to an output stream file with the nickname ‘FIELD_PROD’. The ‘GROUP’ and ‘TIME (DAYS)’ arguments to this command also cause all consecutive streams in which these variables do not change to be summed into aggregates, producing a tabulated file.
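The summing of consecutive streams can be pictured with `itertools.groupby`, which also merges only adjacent items that share a key. The Python sketch below is an illustration under simplifying assumptions: each stream carries a single `moles` number rather than per-component amounts, and the stream records and the function name `tabulate` are invented for the example.

```python
from itertools import groupby

# Hypothetical stream records; real streams carry per-component amounts.
streams = [
    {"GROUP": "FIELD CENTER", "TIME": 30, "moles": 10.0},
    {"GROUP": "FIELD CENTER", "TIME": 30, "moles": 5.0},
    {"GROUP": "SOUTH PLATFORM", "TIME": 30, "moles": 2.0},
    {"GROUP": "FIELD CENTER", "TIME": 30, "moles": 1.0},
]


def tabulate(streams, keys):
    """Sum runs of consecutive streams whose key variables do not change."""
    rows = []
    for key, run in groupby(streams, key=lambda s: tuple(s[k] for k in keys)):
        rows.append(key + (sum(s["moles"] for s in run),))
    return rows


rows = tabulate(streams, ["GROUP", "TIME"])
```

Here the first two records collapse into one row, while the last record stays separate even though its key matches the first row, because it is not adjacent to it. If this is how the input is ordered, sorting the stream file by the tabulated variables beforehand changes the result.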

(see also: ALIAS Table for alternative keywords)

The GAMMAFILE keyword associates a file_nickname with an actual file on disk and opens the file for output. This file receives Gamma distribution modeling results for any conversion in which the same file_nickname is specified (using the FILE option to the GAMMA sub-keyword of CONVERT). The first argument to the GAMMAFILE command is the file_nickname, the second identifies the purpose by means of an option (OPEN or CLOSE), and the third identifies the actual file on disk. This last argument may include OS-specific path directives to specify a file in a directory other than the current one. It can also be the PROMPT keyword, which prompts the user for the file name and so provides a means of interacting with the program.

Example 1: Use of GAMMAFILE

Convert 'In_Char' Gamma InF1 OutF1, File GAM1
. . .
GammaFile GAM1 Open Gammafit.out
. . .
Copy

In this example, a conversion is defined from a previously defined input CHARACTERIZATION named ‘In_Char’ to the “current” characterization. The CONVERT primary keyword will use the GAMMA distribution model, in which the input component ‘InF1’ and heavier and the output component ‘OutF1’ and heavier participate. The optional sub-command FILE to the GAMMA sub-keyword specifies a nickname ‘GAM1’. All GAMMA fitting results will be written to the file pointed to by this nickname. Further on in the data set, a GAMMAFILE command with the OPEN option links this nickname (GAM1) with an actual file ‘Gammafit.out’. When the COPY command initiates the read/convert/write operation, it writes the gamma fitting results to this file, in addition to writing to any stream file(s).

(see also: ALIAS Table for alternative keywords)

The INCLUDE keyword instructs Streamz to include the contents of a file. The argument to this keyword, file_spec, is the name of the file. It may contain operating-system-specific path directives if required. The effect is essentially to insert the contents of the named file at the location of this keyword.

This keyword allows the development of modular input (driver) files for Streamz. CHARACTERIZATION and CONVERT definitions are best kept in INCLUDE files because they are likely to remain constant over many runs. Some generic TABULATE commands may also benefit from being in INCLUDE files.

In conjunction with the DEFINE command, the INCLUDE command offers a very powerful utility for executing the same set of generic instructions on the same token after it is redefined (see Getting Started With Streamz for an example).

Example 1: Simple use of INCLUDE

TITLE 'BO TO COMPOSITIONAL EOS6 CONVERSION'
INCLUDE BO.CHR
STREAMFILE INP1 INPUT BO.STR
INCLUDE EOS6.CNV
STREAMFILE OUT1 OUTPUT EOS6.STR
COPY

This example is a simple, concise, yet complete Streamz input file. A TITLE command declares the intention of the run. An INCLUDE command instructs Streamz to include the file ‘BO.CHR’ (which contains the black-oil CHARACTERIZATION). A STREAMFILE command opens the file ‘BO.STR’ for input and associates the nickname ‘INP1’ with it. Another INCLUDE command instructs Streamz to include the file ‘EOS6.CNV’ (which contains the output CHARACTERIZATION and the complete CONVERT command). A stream file ‘EOS6.STR’ is opened for output, and the COPY command converts all streams from the input to the output stream file.
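The "insertion of the contents" behaviour of INCLUDE can be pictured as a textual expansion of the driver file. The Python sketch below is an illustration only: the in-memory `files` dictionary, the driver name `RUN.STZ`, the placeholder file contents, and the circular-include check are all assumptions of the sketch, not documented Streamz behaviour.

```python
def expand(name, files, _stack=()):
    """Return the lines of `name` with every INCLUDE replaced by file contents."""
    if name in _stack:
        # Assumption of this sketch: refuse files that include themselves.
        raise ValueError(f"circular INCLUDE of {name}")
    out = []
    for line in files[name].splitlines():
        words = line.split()
        if len(words) == 2 and words[0].upper() == "INCLUDE":
            out.extend(expand(words[1], files, _stack + (name,)))
        else:
            out.append(line)
    return out


# Hypothetical file contents; the included files are shown as placeholders.
files = {
    "RUN.STZ": "\n".join([
        "TITLE 'BO TO COMPOSITIONAL EOS6 CONVERSION'",
        "INCLUDE BO.CHR",
        "STREAMFILE INP1 INPUT BO.STR",
        "INCLUDE EOS6.CNV",
        "STREAMFILE OUT1 OUTPUT EOS6.STR",
        "COPY",
    ]),
    "BO.CHR": "<black-oil characterization lines>",
    "EOS6.CNV": "<EOS6 characterization lines>\n<convert lines>",
}

expanded = expand("RUN.STZ", files)
```

After expansion the driver reads as one flat sequence of commands, which is why the order of the INCLUDE lines relative to the STREAMFILE commands matters.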

The LUMP keyword defines a lumped fraction from among the components making up the current characterization and gives it a name. The first argument to this keyword, lump_name, is the name of the lumped fraction. Multiple arguments may then follow, each in the form of a doublet consisting of a component name (or a previous lump_name) and an amount. The idea is to define how much of each component makes up the LUMP. Both elements of the doublet are optional. If the component name is omitted, it defaults to the component following the previous one (with the first defaulting to the first named component in the characterization definition). If the amount is omitted, it defaults to 1.

This named fraction is then available to the program for use in much the same manner as variables defined by the VARIABLE command. It can be used with the SET command in CONVERT definitions, allowing it to serve as a control variable. Lumped fractions are not required to be SET in stream files, because Streamz calculates the lumped fraction property on the fly as the streams are being read by a command that results in a read/convert/write operation.

Lumped fractions can also be used while defining a FILTER. They can be specified on the LHS of a filter construct with the chosen property. If the current value of the lumped fraction’s specified property (e.g. the mole fraction of the C7+ fraction of the current stream) makes the filter construct evaluate to true, the filter is satisfied and the stream is selected for the appropriate action (e.g. COPY, COMBINE etc.).

The “property” mentioned in previous paragraphs is one of the allowed properties for the lumped fraction, listed in the following table:

| Property | Meaning |

| VOLUME | Volume of the lumped fraction made up by combining the volumes of the components contributing to it. |

| AMOUNT | Amount of the lumped fraction made up by combining the amounts of the components contributing to it. |

| VOLUME/VOLUME | Volume of the lumped fraction made up by combining the volumes of the components contributing to it divided by the total volume of all the components in the stream. Same as volume fraction. |

| AMOUNT/AMOUNT | Amount of the lumped fraction made up by combining the amounts of the components contributing to it divided by the total amount of all the components in the stream. |

| MW | Molecular weight of the lumped fraction. |

| MOLES | Moles of the lumped fraction made up by combining the moles of the components contributing to it. |

| MOLES/MOLE | Moles of the lumped fraction made up by combining the moles of the components contributing to it divided by the total moles of all the components in the stream. Same as mole fraction. |

| MASS | Mass of the lumped fraction made up by combining the masses of the components contributing to it. |

| MASS/MASS | Mass of the lumped fraction made up by combining the masses of the components contributing to it divided by the total mass of all the components in the stream. Same as mass fraction. |

| MOLES/MASS | Moles of the lumped fraction made up by combining the moles of the components contributing to it divided by the total mass of all the components in the stream. |

| MASS/MOLE | Mass of the lumped fraction made up by combining the masses of the components contributing to it divided by the total moles of all the components in the stream. |

The volume, mass and moles mentioned in the definitions of the above properties are generic. The actual units of streams (e.g. MSCF/D, kmol/D, gm/cc, lbmol etc.) are irrelevant to Streamz, but must be kept track of by the user.

It must be emphasized that a lumped fraction is not the same as pseudoization (or lumping) of components. No lumped critical properties are generated as a result of this command. This feature only provides a means of manipulating a stream based on its compositional properties (e.g. mole fraction, mass, volume etc.).
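As a worked illustration of a few of the properties in the table, the Python sketch below computes MOLES, MASS, MOLES/MOLE, MASS/MASS and MW for a lumped fraction from per-component moles. The component amounts and molecular weights are invented, the function name `lump_properties` is hypothetical, and taking MW as lump mass divided by lump moles is an assumption of the sketch rather than a documented Streamz formula.

```python
def lump_properties(moles, mw, lump):
    """moles, mw: per-component dicts; lump: component -> amount included."""
    lump_moles = sum(frac * moles[c] for c, frac in lump.items())
    lump_mass = sum(frac * moles[c] * mw[c] for c, frac in lump.items())
    total_moles = sum(moles.values())
    total_mass = sum(moles[c] * mw[c] for c in moles)
    return {
        "MOLES": lump_moles,
        "MASS": lump_mass,
        "MOLES/MOLE": lump_moles / total_moles,
        "MASS/MASS": lump_mass / total_mass,
        # Assumed definition: average molecular weight of the lump.
        "MW": lump_mass / lump_moles,
    }


# Hypothetical six-component stream and molecular weights.
moles = {"X1": 50.0, "X2": 30.0, "X3": 10.0, "F1": 6.0, "F2": 3.0, "F3": 1.0}
mw = {"X1": 16.0, "X2": 30.0, "X3": 44.0, "F1": 100.0, "F2": 150.0, "F3": 250.0}

# A lump made of the whole (1) of the last three components.
c7p = {"F1": 1.0, "F2": 1.0, "F3": 1.0}
props = lump_properties(moles, mw, c7p)
```

For these numbers the lump holds 10 of the 100 moles in the stream, so its MOLES/MOLE value is 0.10. No critical properties appear anywhere in the calculation, in line with the distinction from pseudoization drawn above. A stream with zero moles in the lump would need separate handling before the MW division.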

Example 1: Use of LUMP for conversion control variable.

TITLE 'EXAMPLE USE OF LUMP COMMAND'
CHAR 6-COMP
NAME X1 X2 X3 F1 F2 F3
LUMP C7P 1 F1 F2 F3
STREAMFILE INP1 INPUT 6C.STR
INCLUDE EOS17.CHR
STREAMFILE OUT1 OUTPUT EOS17.STR
CONVERT 6-COMP FROM MOLES TO MOLES CONSERVING MOLES
SET C7P MOLES/MOLE 0.10
INCLUDE SPLIT.010
SET C7P MOLES/MOLE 0.06
INCLUDE SPLIT.006
SET C7P MOLES/MOLE 0.02
INCLUDE SPLIT.002
COPY

In this example, a TITLE command declares the intention of the run and then a CHARACTERIZATION named ‘6-COMP’ is defined. Then a LUMP fraction called ‘C7P’ is defined to consist of the whole (i.e. 1) of the last 3 components. The amount element of the doublet is given only for the first component (F1); the others default to 1. A STREAMFILE containing the streams in this characterization is opened for input. The file ‘EOS17.CHR’ (containing a 17-component EOS characterization) is included (INCLUDE command). A stream file ‘EOS17.STR’ is opened for output. The CONVERT command specifies the input characterization and the input / output / conserve units. The MOLES/MOLE property of the lumped fraction C7P is set to 0.10 and the file ‘SPLIT.010’ (containing SPLIT factors at this value of moles/mole of C7P) is included. The MOLES/MOLE property of ‘C7P’ is then changed twice, each time including the relevant SPLIT factors. Finally the COPY command initiates the read/convert/write action.

Example 2: Use of LUMP in FILTER.

. .
LUMP C7P 1 F1 F2 F3
. .
FILTER RICH C7P MOLES/MOLE GE 0.05
FILTER LEAN C7P MOLES/MOLE LE 0.01
COPY IF RICH TO RICH
COPY IF LEAN TO LEAN

Only the relevant lines are shown in this example. The same input CHARACTERIZATION as in the previous example is assumed. A lumped fraction called ‘C7P’ is defined to consist of the whole (1) of the last 3 components. Two filters are defined. The first, named ‘RICH’, requires the MOLES/MOLE property of the lumped fraction C7P to be greater than or equal to 0.05. The second, named ‘LEAN’, requires the MOLES/MOLE property of the lumped fraction C7P to be less than or equal to 0.01. Two COPY commands direct converted streams to be written TO the relevant files (specified via nicknames), selecting only the streams that satisfy the respective filters.
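One consequence of the two thresholds is that a stream whose C7P mole fraction lies between 0.01 and 0.05 satisfies neither filter and is written to neither file. The Python sketch below illustrates this with invented stream compositions; the function names and the stream labels are hypothetical.

```python
def c7p_mole_fraction(composition):
    # MOLES/MOLE of a lump made of the whole of F1, F2 and F3.
    heavy = composition["F1"] + composition["F2"] + composition["F3"]
    return heavy / sum(composition.values())


def route(streams):
    """Sort stream labels into the RICH and LEAN outputs by filter result."""
    out = {"RICH": [], "LEAN": []}
    for label, composition in streams:
        z = c7p_mole_fraction(composition)
        if z >= 0.05:  # FILTER RICH C7P MOLES/MOLE GE 0.05
            out["RICH"].append(label)
        if z <= 0.01:  # FILTER LEAN C7P MOLES/MOLE LE 0.01
            out["LEAN"].append(label)
    return out


# Hypothetical streams with C7P mole fractions of 0.10, 0.03 and 0.005.
streams = [
    ("s1", {"X1": 90.0, "X2": 0.0, "X3": 0.0, "F1": 6.0, "F2": 3.0, "F3": 1.0}),
    ("s2", {"X1": 97.0, "X2": 0.0, "X3": 0.0, "F1": 3.0, "F2": 0.0, "F3": 0.0}),
    ("s3", {"X1": 99.5, "X2": 0.0, "X3": 0.0, "F1": 0.5, "F2": 0.0, "F3": 0.0}),
]

routed = route(streams)
```

In this sketch the stream labelled `s2` appears in neither list, so a third filter would be needed if intermediate streams must also be captured.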

The MIX keyword allows the creation of named streams by adding previously named streams and/or components. The first argument (dest_stream_name) to this command is the name of the stream being created. Multiple arguments can follow, each a triplet consisting of

1) the name of the stream or component being mixed,

2) the amount and

3) the unit.

The destination stream will be assigned to the current characterization.

The MIX command reads in and executes all the user's instructions for constructing named streams (from raw components and/or existing named streams). It stores the fluid streams and keeps track of how many volume, amount, mole or mass units of each component they contain.

The MIX command handles a wide variety of ways the users might want to input their data. The input can occupy any number of lines (including blank lines), ending only when something is encountered that doesn't fit the pattern. The created stream nickname dest_stream_name can be almost any string of characters, as long as it doesn't contain delimiters (e.g., blanks, tabs, certain punctuation), is not numeric, is not a component name, and won't match any of the unit keywords (see below). A series of optional arguments in the form of triplets or ingredients follows the mandatory dest_stream_name. A triplet consists of 1, 2, or all 3 of the following pieces of data, in any order: